This post is about writing a high performance dedicated programs which need to make use of all CPU cores and RAM available. It is common knowledge that high-performance software must take advantage of multiple cores as individual cores are not getting faster anymore. However, it is less known that there is a similar story with RAM. Back in the old days, a single memory bus could handle all the load needed. But this is not the case with modern beefy multicore servers. There the memory is divided into independent parts and assigned to groups of CPU cores. Each CPU core can access part of memory assigned to it at full speed, while all the other memory with some performance penalty. This is called a Non-uniform memory access (NUMA).

CPU and OS try to do their best so that NUMA would not impact performance for most programs, but high performance dedicated programs do not fall into this category. Most of the time, dedicated non-NUMA aware program would run significantly slower. How much slower greatly depends on program memory access patterns. I have seen about 20% performance decrease in one case. In other cases there can be even worse performance decrease when a system would start swapping to disk instead of giving free available memory to the program.

Popular database servers usually support NUMA out of the box. If not, the article about MySQL insane swapping provides workarounds using command line tools without changing source code. However, the most performance improvement can be gained by making a program NUMA-aware. This involves properly organizing data and asking OS for making worker threads run on specific NUMA nodes. Modern versions of Windows and Linux both have good APIs to support that.

Reorganizing data

For modern beefy server it is common to have hundreds of gigabytes of state in memory. What matters for NUMA optimization is whether the state can easily be split into independent parts. Say system runs queries on multiple worker threads and each thread can perform any task. In such case, any thread can access any memory location. That is the program is not NUMA-aware and it accesses either local or non-local memory without knowing.

Having a bit of knowledge of how NUMA works, program can be organized in a better way. NUMA introduces a term ‘node’ which can be thought of as part of memory which can be accessed with certain speed. From CPU core perspective there are two kinds of NUMA nodes: local (accessed with maximum speed) and non-local (accessed with some delay).

Large state can be split into independent parts by some application specific criteria so that state which is usually accessed together is stored in the same NUMA node. For example, if it were a social site, all data for a single user (profile, comments, articles, etc.) should probably be stored together. State (data) split does not mean that there can be no data sharing between different NUMA nodes. In practice, it most often will not be the case. As in everything related with optimization, most critical memory structures should be split into independent parts and given special care to be processed independently while not so critical ones processed as usual.

Reorganizing workers

As stated before, it is important that tasks would not run on random CPU core but rather be scheduled on the one which can access the required memory locally (in the fastest way possible).

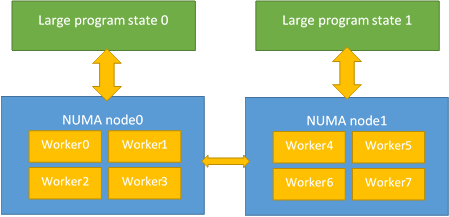

That means there should be not a single pool of threads, but rather a separate pool per each NUMA node. This gives us a full picture on how data and worker threads can be organized in a NUMA-aware application:

We can see that for each NUMA node there is a separate program state and separate worker threads. It is almost as if there were independent processes per each NUMA node. However, memory accesses between different NUMA nodes are also shown with a smaller arrow. Meaning, that every thread can still access the full process address space (but thicker arrows for large program state indicate that most accesses are done to local NUMA node).

Getting started

Linux distributions contain an ‘libnuma’ library which provides the required APIs. Most often a development package, such as libnama-dev will need to be installed. Program will need to #include <numa.h> and link the corresponding library. Also, a function to check if NUMA is available must be called before using any of the other functions:

if (numa_available() == -1)

{

printf("no NUMA support\n");

return 1;

}

Windows provides NUMA API support in kernel32.dll so no additional libraries are required. A common #include <Windows.h> is needed. However, the functions in the following code snippets are available only on Windows 7/Windows Server 2008 R2 or later. It may be needed to load them dynamically if older versions need to be supported as well.

Please note that provided code examples are missing error handling. It must be added in real production code and will probably depend on used framework, etc.

Enumerating NUMA nodes

Both Windows and linux provide APIs to retrieve the maximum number of NUMA nodes on the system and then retrieve information about individual nodes. It is important to take into account that not all nodes may be available for use within the application. So each node must be checked for availability.

Different types are used to represent NUMA nodes on Windows and linux, but most of the time they can be treated transparently (only used to call BindThreadToNumaNode(), introduced later in the post, and not examine the contents), so generic code for both platforms is possible.

std::vector<int> EnumerateNumaNodes()

{

int maxNodes = numa_num_possible_nodes();

std::vector<int> numaNodes;

for (int i = 0; i < maxNodes; i++)

{

if (numa_bitmask_isbitset(numa_all_nodes_ptr, i))

numaNodes.push_back(i);

}

return numaNodes;

}

#include <Windows.h>

#include <vector>

std::vector<GROUP_AFFINITY> EnumerateNumaNodes()

{

ULONG highestNodeNumber;

BOOL r;

r = GetNumaHighestNodeNumber(&highestNodeNumber);

// TODO: Check errors (assigned to 'r' var)

std::vector<GROUP_AFFINITY> numaNodes;

for (ULONG i = 0; i <= highestNodeNumber; i++)

{

GROUP_AFFINITY numaNode;

r = GetNumaNodeProcessorMaskEx((USHORT)i, &numaNode);

if (numaNode.Mask != 0)

{

numaNodes.push_back(numaNode);

}

}

return numaNodes;

}

Binding thread to one of NUMA nodes

Finally, each of the worker threads should be bound to one of the available NUMA nodes. Binding will ensure that memory allocation and execution will only take place on that single NUMA node. And there is no extra effort needed: malloc() or C++ new operator will work as they should. (There are also methods to allocate memory on explicitly specified NUMA nodes if needed, but they are more complicated and can often be avoided).

void BindThreadToNumaNode(int numaNode)

{

struct bitmask * numaBitmask = numa_bitmask_alloc(numa_num_possible_nodes());

numa_bitmask_setbit(numaBitmask, numaNode);

numa_bind(numaBitmask);

numa_bitmask_free(numaBitmask);

}

void BindThreadToNumaNode(const GROUP_AFFINITY & numaNode)

{

BOOL r = SetThreadGroupAffinity(GetCurrentThread(), &numaNode, NULL);

// TODO: Check errors (assigned to 'r' var)

}

Conclusion

Modern beefy servers these days usually provide more than one NUMA node. Existing applications can usually work on them transparently, but with some extra effort they can be made NUMA-aware and perform considerably faster. A simple pattern in this post describes one way how this can be done.

Tried this pattern in your application? Use some other pattern in NUMA-aware application successfully? Please leave a comment.